Post by: Anees Nasser

In recent years, AI technologies have grown at an unprecedented pace, often faster than the relevant laws could be written. This rapid advancement has allowed powerful AI systems to enter consumer markets without sufficient oversight. As these technologies began to make decisions, process personal data, and create content resembling human output, global concerns escalated.

Consequently, governments worldwide began debating whether AI should be treated as a consumer product, a public utility, or even a potential national-security issue. The pressure to regulate has grown month after month, leading to what experts describe as the regulated era of AI, one that emphasizes safety, transparency, and accountability.

The repercussions will not only affect large enterprises but will also reach into our homes, workplaces, schools, and daily activities.

To comprehend the impending changes in AI regulation, it's crucial to grasp just how integrated these tools have become in our lives.

Every time an email application predicts your next sentence, or a chatbot provides an immediate answer, AI is at work. Even many modern spellcheck and autocorrect features rely on models trained on large text datasets.

Recommendation algorithms on streaming platforms use sophisticated learning mechanisms, adapting to user preferences through behavior such as pauses and skips.

Fraud alerts, tailored spending insights, and loan evaluations rely heavily on AI-driven risk assessment techniques.

From navigation to ride-sharing, machine learning plays a key role in route optimization and traffic management.

Online shopping platforms utilize consumer behavior analysis to suggest products and tailor user experiences.

Facial recognition, photo enhancement, voice recognition, battery optimization, and app management all use AI technologies.

Because AI is so deeply embedded, even minor changes in AI regulation can have significant effects on daily life.

Many AI systems work by analyzing user data, including activity, voice recordings, location history, and more. Regulators want clarity about how that data is used.

AI platforms can unintentionally favor certain demographics due to biased datasets. Legislation aims to promote equity and prevent discrimination.

Advanced AI systems, particularly generative and predictive models, can produce inaccuracies with severe consequences. Governments demand stringent testing prior to deployment.

When harm occurs due to AI, the question arises: who is accountable? The proposed regulations strive to clarify accountability in these scenarios.

Advanced AI technology also carries risks, including misinformation and cyber threats. Regulatory measures seek to mitigate these vulnerabilities.

These concerns directly affect the AI applications we interact with daily.

Here’s how various categories of daily AI tools could evolve.

Messaging services may need to indicate when suggested responses or summaries are AI-generated.

Apps might be obligated to explain more clearly how they store data. Users could receive prompts asking for consent before AI features are enabled.

With restrictions on data usage, predictive text may mirror individual writing styles less closely.

Platforms might face restrictions on analyzing sensitive user traits, which could significantly change the content users see.

Content altered by AI may require explicit labelling, affecting everything from edited images to videos.

Verification systems could be necessary to prevent minors from accessing inappropriate algorithm-based content.

If AI determines creditworthiness, financial institutions might be compelled to disclose the rationale behind loan decisions.

AI systems may undergo enhanced safety checks before they go live, which could affect how efficiently they detect fraud.

With new laws, banks may no longer be able to utilize detailed data analytics to customize offerings.

Regulators may require mapping services to avoid unverified shortcuts, leading to more conservative travel estimates.

Under new transparency requirements, ride-sharing platforms may need to justify price fluctuations.

Users might receive comprehensive details regarding how their location data is utilized and retained.

Should data usage tighten, AI-based shopping recommendations may lose their current specificity.

Retailers could be required to notify consumers if prices are adjusted based on individual data.

Platforms might need to authenticate AI-produced reviews or clearly label them as such.

In compliance with privacy regulations, voice recognition technologies may rely more on device-based analysis, limiting external data storage.

Voice-operated tools could be required to disclose when AI assists with a command.

Devices may restrict continuous listening features to minimize data collection.

Content created through AI may be required to have clear markers for authenticity.

These tools may be configured to prevent the generation of harmful content.

Companies may be obliged to disclose the data on which their models rely, enhancing user understanding.

Many workplaces rely on AI for productivity assessments; new regulations may restrict real-time monitoring.

Recruitment tools may not be permitted to analyze physical cues or sensitive information during interviews.

AI systems that make high-impact decisions may be legally required to have human oversight to ensure fairness.

Users will see frequent labels and consent prompts as AI becomes more visible in how it operates.

Safety checks could lead to delays or restrictions on certain functionalities until compliance is ensured.

Stricter regulation may raise confidence in AI but could also reduce personalization.

Users will find improved options for privacy, consent, and data visibility.

Expect new settings to appear once regulations take effect.

Some features will need your explicit consent; understanding these options will be advantageous.

Some functionalities may be removed or altered to align with compliance mandates.

Labels and disclosures will clarify when and how AI influences your interactions.

Heightened age verification and security measures may become commonplace.

AI will likely become safer, more transparent, and more accountable. However, innovation may slow as organizations prioritize compliance. Ultimately, AI will continue to shape our reality; the new regulations aim to improve how it does so.

For everyday users, the most noticeable change will be heightened awareness. Tools that once worked quietly in the background will now display clear labels and controls. The era of "silent AI" is winding down, replaced by an age of more responsible AI usage.

This article is for informational and educational purposes only. It does not constitute legal or financial advice. AI regulations differ across regions and are subject to rapid change.

OpenAI Highlights Growing Cybersecurity Threats from Emerging AI Technologies

OpenAI has raised alarms about the increasing cyber risks from its upcoming AI models, emphasizing s

Manchester City Triumphs 2-1 Against Real Madrid, Alonso Faces Increased Scrutiny

Manchester City secured a 2-1 victory over Real Madrid, raising concerns for coach Xabi Alonso amid

Cristiano Ronaldo Leads Al Nassr to 4-2 Victory Over Al Wahda in Friendly Face-Off

Ronaldo's goal helped Al Nassr secure a 4-2 friendly win over Al Wahda, boosting anticipation for th

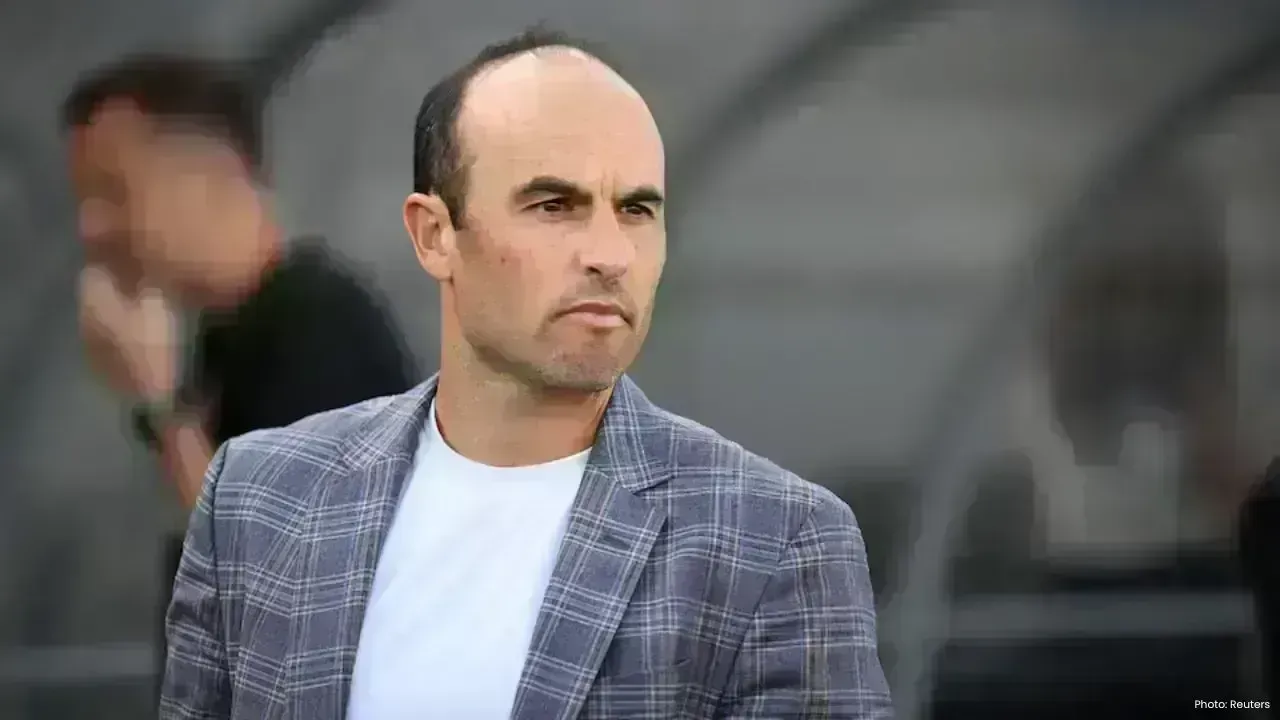

Landon Donovan Challenges Australia Coach on World Cup Prospects

Landon Donovan counters Australia coach Tony Popovic’s optimism for the World Cup, expecting an earl

Mercedes-Benz Forms Landmark Partnership with WTA

Mercedes-Benz and the WTA unveil a significant partnership effective January 2026, with major invest

Abhishek Addresses Divorce Rumours Concerning His Family

Abhishek Bachchan confirms that daughter Aaradhya remains oblivious to divorce speculations, focusin