Post by: Anees Nasser

Advances in artificial intelligence that can imitate human speech have once again become a focus of public concern. This week, reports of synthetic audio impersonating public figures and private individuals circulated widely, fuelling debate about consent, identity and the risks of misused voice technology.

Initially celebrated for its benefits in media and accessibility, voice cloning has moved to the centre of an ethical and legal contest. From fraudulent phone calls to fabricated interviews, the misuse of synthesised voices is producing tangible harms.

The current discussion extends beyond technical capability: it touches on trust, legal safeguards and the responsibilities of creators and platforms.

Several convincing AI-generated audio clips circulated this week, including fabricated statements attributed to political leaders and staged celebrity endorsements. One high-profile fake of a global figure spread rapidly across social channels before experts exposed it, underlining how realistic these outputs have become.

These incidents revived anxiety about the integrity of public discourse when vocal authenticity can be manufactured so precisely.

Technologies that once required significant resources are now widely available through open-source projects and commercial services. Minimal audio samples, sometimes just a few seconds long, can be enough for a model to recreate another person's voice convincingly.

Even more worrying is the emergence of live-cloning capabilities that can alter a speaker’s voice during calls or video streams, creating new vectors for deception and fraud.

While politicians and entertainers attract the most attention, this week’s reports emphasised harm to ordinary people: scam victims receiving calls from convincingly imitated relatives, and others targeted in extortion schemes that relied on emotional manipulation.

Such real-world consequences propelled the topic into international conversations about regulation and consumer protection.

Voice cloning systems rely on deep neural networks to model a speaker’s unique vocal traits — pitch, cadence, accent and expressive cues. After training, these systems can synthesise speech that mirrors the source voice with disturbing fidelity.

Contemporary approaches combine text-to-speech pipelines with architectures such as GANs and transformer models to reproduce nuanced inflections and even breathing patterns.

Voice synthesis has clear positive uses: restoring communication for those who have lost speech, streamlining dubbing and enabling new creative formats. Yet the same ease and low cost that expand access also lower barriers for malicious actors.

As of 2025, free or inexpensive online services can generate lifelike voice clones in minutes, dramatically widening the potential for misuse.

The central ethical dilemma concerns who controls a voice. If a person’s speech is used to produce a synthetic likeness without permission, does that constitute theft of identity or permissible reuse?

For performers and influencers, a voice is often integral to their professional identity. Unauthorized cloning threatens income and muddles legal accountability.

Distinguishing authentic speech from generated audio is increasingly difficult. When fabricated statements are presented as real — whether political claims, interviews or news — the reputational damage can be immediate and long-lasting.

This raises the normative question: even if we can recreate reality technologically, should we permit it without restraint?

Encountering a familiar voice delivering shocking or false content can cause distress. Mental-health professionals warn that repeated exposure to synthetic deception may erode interpersonal trust and public confidence in media.

Narrators, voice actors and broadcasters are facing possible displacement by digital replicas of their own voices. Industry groups and unions are beginning to draft protections to safeguard members from unconsented replication.

Faced with growing misuse, a number of governments proposed or introduced rules this week aimed at deepfake audio and synthetic media. Proposals include mandatory labelling of commercial synthetic content and criminal penalties for impersonation or fraud.

Globally, however, legal approaches remain uneven as lawmakers try to catch up with rapid technological development.

Existing copyright laws protect creative works but not biological traits like a human voice. Legal scholars are advocating for robust personality-rights frameworks that would recognise voice likeness as an aspect of personal identity.

Courts will need to decide how to allocate ownership and control over intangible vocal characteristics — a legal challenge likely to shape the coming decade.

Major AI providers are updating policies to prohibit non-consensual cloning and are experimenting with watermarking generated audio. Social networks are also developing detection systems to flag suspect clips before they spread widely.

Publicly shared recordings, such as podcasts, videos and voice posts, can supply data for model training. Limiting the length of published clips and watermarking them can reduce the risk of unauthorized cloning.

Voice professionals should explore registering their vocal identity with digital-rights services that create cryptographic markers or fingerprints, offering a way to prove ownership and detect misuse.
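As a rough illustration of how such a fingerprint might work, the Python sketch below hashes a recording with SHA-256 and binds the result to an owner's name with an HMAC tag that only the registry can produce. The function names, the `owner` string format and the shared `registry_key` are hypothetical assumptions, not any real service's scheme; actual services may instead use perceptual fingerprints that survive re-encoding, which an exact hash does not.

```python
import hashlib
import hmac


def fingerprint(audio_bytes: bytes) -> str:
    """Return a SHA-256 hex digest identifying this exact recording."""
    return hashlib.sha256(audio_bytes).hexdigest()


def sign_registration(audio_bytes: bytes, owner: str, registry_key: bytes) -> str:
    """Hypothetical registry step: bind an owner's name to a recording's fingerprint."""
    message = f"{owner}:{fingerprint(audio_bytes)}".encode("utf-8")
    return hmac.new(registry_key, message, hashlib.sha256).hexdigest()


def verify_registration(audio_bytes: bytes, owner: str, registry_key: bytes, tag: str) -> bool:
    """Recompute the tag and compare in constant time to avoid timing leaks."""
    expected = sign_registration(audio_bytes, owner, registry_key)
    return hmac.compare_digest(expected, tag)


if __name__ == "__main__":
    key = b"example-registry-key"  # hypothetical secret held by the registry
    clip = bytes(range(256))       # stand-in for the bytes of an audio file
    tag = sign_registration(clip, "Jane Narrator", key)
    print(verify_registration(clip, "Jane Narrator", key, tag))
    print(verify_registration(clip + b"\x00", "Jane Narrator", key, tag))
```

Changing even one byte of the file yields a different fingerprint, so an exact hash proves possession of a specific recording rather than ownership of a voice in general.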

Emerging detectors look for anomalies in an audio clip's frequency content and timing that betray a synthetic origin. Newsrooms and organisations are increasingly integrating these tools into verification workflows.
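The frequency side of such detection can be illustrated with spectral flatness, a standard measure of how noise-like a frame's power spectrum is (near 0 for a pure tone, higher for noise). The sketch below is a simplified example under stated assumptions: it uses a naive DFT for readability, and the `flag_frames` rule with its `low` and `high` bounds is hypothetical, standing in for thresholds a real detector would learn from genuine recordings. Deployed detectors combine many features, including the timing cues not covered here, usually inside trained classifiers.

```python
import cmath
import math
import random


def power_spectrum(frame):
    """Naive DFT power for bins 0..n/2 (O(n^2), acceptable for short frames)."""
    n = len(frame)
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(frame))
        spectrum.append(abs(acc) ** 2)
    return spectrum


def spectral_flatness(frame, eps=1e-12):
    """Geometric mean divided by arithmetic mean of the power spectrum (between 0 and 1)."""
    power = [p + eps for p in power_spectrum(frame)]  # eps avoids log(0)
    geometric = math.exp(sum(math.log(p) for p in power) / len(power))
    arithmetic = sum(power) / len(power)
    return geometric / arithmetic


def flag_frames(frames, low, high):
    """Hypothetical rule: flag frames whose flatness falls outside [low, high]."""
    return [i for i, f in enumerate(frames) if not (low <= spectral_flatness(f) <= high)]


if __name__ == "__main__":
    random.seed(0)
    n = 256
    tone = [math.sin(2 * math.pi * 8 * t / n) for t in range(n)]  # purely tonal frame
    noise = [random.gauss(0.0, 1.0) for _ in range(n)]             # noise-like frame
    print(spectral_flatness(tone), spectral_flatness(noise))
```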

Creators and industry stakeholders need to advocate for explicit legal definitions of voice consent, which would simplify enforcement against bad actors.

Transparent disclosure when synthetic voices are used — for accessibility or creative reasons — helps maintain trust and differentiates ethical practice from deception.

Despite the controversies, voice synthesis remains a valuable tool: restoring speech for medical patients, speeding up multilingual dubbing, and reducing production costs while preserving artistic intent under proper licensing.

When used with consent and clear crediting, synthetic voices can complement rather than compete with human professionals.

Some artists are already monetising authorised voice models through transparent licensing, creating new revenue while retaining oversight. This points toward a future where voice IP is treated as a licensable digital asset.

Developers face increasing pressure to include inaudible signatures in algorithmically generated audio. Such markers could help trace and attribute synthetic content to its source.
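One long-established way to embed such a signature is spread-spectrum watermarking: a low-amplitude pseudorandom sequence derived from a secret key is added to the audio, and anyone holding the key can detect it by correlation. The sketch below shows that idea in plain Python under simplifying assumptions; the key values, `strength` and the test tone are illustrative, and it omits the perceptual shaping and robustness to compression that a deployed system would need. It is not a description of any particular provider's scheme.

```python
import math
import random


def pn_sequence(key: int, length: int):
    """Key-seeded pseudorandom +1/-1 sequence that acts as the secret signature."""
    rng = random.Random(key)
    return [1.0 if rng.random() < 0.5 else -1.0 for _ in range(length)]


def embed_watermark(samples, key: int, strength: float = 0.02):
    """Add the keyed sequence to the audio at a low amplitude."""
    chips = pn_sequence(key, len(samples))
    return [s + strength * c for s, c in zip(samples, chips)]


def detect_watermark(samples, key: int, strength: float = 0.02) -> bool:
    """Correlate with the keyed sequence: about `strength` if marked, about 0 otherwise."""
    chips = pn_sequence(key, len(samples))
    score = sum(s * c for s, c in zip(samples, chips)) / len(samples)
    return score > strength / 2


if __name__ == "__main__":
    rate = 16000  # assumed sample rate; one second of a 220 Hz test tone
    host = [0.2 * math.sin(2 * math.pi * 220 * t / rate) for t in range(rate)]
    marked = embed_watermark(host, key=1234)
    print(detect_watermark(marked, key=1234))  # correct key on marked audio
    print(detect_watermark(host, key=1234))    # unmarked audio
    print(detect_watermark(marked, key=9999))  # wrong key
```

With the correct key the first check should report a detection, while the unmarked audio and the wrong key should not: without the key, the correlation averages out to roughly zero, which is what lets a provider attribute its own output while third parties cannot easily check for the mark.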

Responsible companies should ensure consent when sourcing voice data for model training. Transparent provenance is both an ethical imperative and, in many jurisdictions, a regulatory requirement.

Research groups are building public verification platforms where users can submit suspicious audio for analysis. Wider access to these services could become an important defence against misinformation.

If vocal authenticity can be manufactured at scale, the capacity to uphold trust in journalism, governance and everyday communication is at stake. The issue extends from media ethics to national security considerations.

Targets of voice deepfakes report feelings akin to identity theft; the sense that an intimate personal trait can be co-opted undermines psychological safety in the digital era.

Technological capability is ethically neutral until applied. The central question is how stakeholders choose to use voice synthesis: to empower or to deceive.

Responsibility rests with developers, platforms and users alike to align innovation with social safeguards.

Voice cloning will continue to advance. The immediate task is to channel that progress toward frameworks that protect rights, preserve trust and enable beneficial uses. Industry consortia are beginning work on ethics guidelines combining transparency, consent mechanisms and detection standards.

The coming year will be critical: policymakers, technologists and creators must agree on principles that prevent misuse while allowing legitimate innovation to proceed.

The recent surge in AI voice-cloning incidents is more than a headline — it is a reminder that technological innovation demands parallel development of legal, ethical and technical safeguards. Protecting the integrity of voice requires coordinated action: clear laws, robust platform policies and an informed public.

Preserving voice as a personal and professional asset is now a collective responsibility.

This analysis is offered for informational purposes and does not substitute for professional legal or technical advice. Readers should consult qualified specialists when addressing AI or data-protection issues.

Kazakhstan Boosts Oil Supply as US Winter Storm Disrupts Production

Oil prices inch down as Kazakhstan's oilfield ramps up production, countered by severe disruptions f

Return of Officer's Remains in Gaza May Open Rafah Crossing

Israel confirms Ran Gvili's remains identification, paving the way for the Rafah border crossing's p

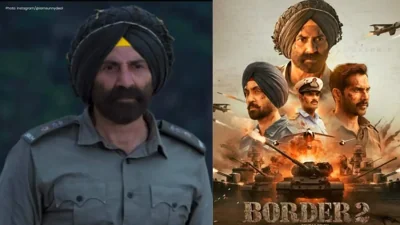

Border 2 Achieves ₹250 Crore Globally in Just 4 Days: Sunny Deol Shines

Sunny Deol's Border 2 crosses ₹250 crore in 4 days, marking a significant breakthrough in global box

Delay in Jana Nayagan Release as Madras HC Bars Censorship Clearance

The Madras High Court halts the approval of Jana Nayagan's censor certificate, postponing its releas

Tragedy Strikes as MV Trisha Kerstin 3 Accident Leaves 316 Rescued

The MV Trisha Kerstin 3 met an unfortunate fate near Jolo, with 316 passengers rescued. The governme

Aryna Sabalenka Advances to Semi-Finals, Targeting Another Grand Slam Title

Top seed Aryna Sabalenka triumphed over Jovic and now faces Gauff or Svitolina in the semi-finals as