Post by: Anees Nasser

Artificial intelligence is advancing at a remarkable pace, evolving from experimental tools into vital components of banking, healthcare, education, and beyond. Whether it is approving loans or curating recommendations, more and more of your online interactions rely on AI.

However, this convenience brings concerns.

Data breaches have plagued users for years. Now a pressing question arises: what happens when software, not just people, oversees sensitive information? Will failures increase? Will accountability diminish?

This is not a speculative worry; it is the catalyst for a global push to reform how AI systems are developed, monitored, and governed. Over the next couple of years, new policies are expected to make AI infrastructure more resilient and secure.

For most users, one pivotal question remains:

Will your personal data be genuinely safeguarded by 2026, or is this just another empty promise?

AI infrastructure encompasses far more than code. It includes:

Data storage centers

Server systems

Cloud-based services

Platforms for machine learning

Training environments

Model deployment mechanisms

Backup and redundancy solutions

Security monitoring tools

In essence, AI infrastructure is the foundation on which these systems run. Securing AI therefore means getting all of the following right:

Data storage locations

Access permissions

Data processing methods

Data flow

Breach detection speed

Communication during incidents

In the absence of robust standards, companies have relied on self-regulation, and that approach has proven ineffective. This realization is now driving government action.

Unique features of AI systems include:

Continuous learning capabilities

Behavioral evolution

Dependence on large data sets

Unpredictable interactions

Self-directed decision-making

Traditional applications execute instructions exactly as written; AI systems learn patterns that no one explicitly programmed.

This breaks the assumptions on which traditional security was built.

Traditional security focused on:

Password security

Firewalls

Access control mechanisms

Data encryption

AI introduces new vulnerabilities, such as:

Model poisoning

Contaminated training datasets

Exploitation of AI hallucinations

Automated attacks

Identity inference risks

Synthetic data leaks

Manipulation of user behavior

Human errors can usually be traced to an individual. AI errors often blur accountability, allowing damage to spread quickly.

For years, technology outpaced regulation. Current trends, however, point to a strong push to close that gap.

Countries are implementing:

Mandatory audits for AI systems

Licensing requirements for sensitive AI applications

Frameworks for accountability

Transparency mandates for algorithms

Incident reporting policies

Breach notification laws

What were once guidelines are becoming enforceable laws.

New regulations are addressing:

Data collection methods

Duration of data retention

Data transfer permissions

Processing rights

Deletion timelines

Unauthorized access to data will no longer be treated as a mere accident; severe penalties are becoming commonplace.

Organizations deploying AI models will need to:

Document decision-making processes

Keep training logs

Explain output generation

Retain all version histories

Opaque black-box systems are being phased out.

It is now imperative that every AI system is verifiable.

Past technology was often built with security as an afterthought. Current standards require:

Integrated encryption

Default privacy features

Minimal data storage practices

Accountability for access

Automatic anonymization of sensitive data
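To make "automatic anonymization of sensitive data" more concrete, here is a minimal Python sketch that replaces direct identifiers with a keyed hash (HMAC-SHA256) before a record is stored. Strictly speaking this is pseudonymization rather than full anonymization: whoever holds the key can recompute the hash for a known identifier and re-link it. The field names, the `protect_record` helper, and the hard-coded key are hypothetical and for illustration only.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; a real system would load it
# from a secrets manager rather than hard-coding it.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Hypothetical set of fields treated as direct identifiers.
SENSITIVE_FIELDS = {"email", "phone"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with its HMAC-SHA256 hex digest."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def protect_record(record: dict) -> dict:
    """Return a copy of the record with sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(value)) if field in SENSITIVE_FIELDS else value
        for field, value in record.items()
    }


if __name__ == "__main__":
    raw = {"user_id": 42, "email": "alice@example.com", "country": "DE"}
    # The email is replaced by a 64-character digest; other fields are unchanged.
    print(protect_record(raw))
```

Because the same identifier and key always produce the same digest, pseudonymized records can still be joined for analysis without the raw email address ever being stored.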

Security must be built in before any AI tool reaches users.

Organizations will no longer have the luxury of long investigative delays.

New standards require:

Prompt reporting of incidents

Public disclosures within hours or days

Compensation obligations

Verification of full data deletion

Transparency is now a core part of security practice.

Non-compliance will lead to:

Significant fines

Bans on services

Criminal investigations

Market setbacks

Brand deterioration

Security failures will no longer end with an apology; they will carry legal consequences.

Global security protocols are being shaped by:

International coalitions

National cybersecurity entities

Technology regulatory bodies

Civil rights groups

Academic institutions

Standards bodies such as the International Organization for Standardization (ISO) and the U.S. National Institute of Standards and Technology (NIST) are central to this effort.

They play a pivotal role in bringing order to a fragmented landscape.

Expect a shift from convoluted permission settings to clearer controls.

Look for:

Simplified dashboards

Easy options for data removal

Defined timeframes for consent

Access based on necessity

Clear storage practices

Blanket “agree to everything” buttons will be phased out.
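Consent with a defined timeframe and a specific purpose can be modeled quite simply. The sketch below assumes that each consent grant records a purpose label and a validity period, and it allows processing only while a matching, unexpired grant exists. `ConsentGrant`, `is_permitted`, and the purpose names are hypothetical, not drawn from any particular law or product.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ConsentGrant:
    purpose: str          # hypothetical purpose label, e.g. "personalization"
    granted_at: datetime  # timezone-aware UTC timestamp
    valid_for: timedelta  # how long consent lasts before it must be renewed


def is_permitted(grants, purpose, now=None):
    """True only if an unexpired grant exists for this exact purpose."""
    if now is None:
        now = datetime.now(timezone.utc)
    return any(
        g.purpose == purpose and now < g.granted_at + g.valid_for
        for g in grants
    )


if __name__ == "__main__":
    start = datetime(2026, 1, 1, tzinfo=timezone.utc)
    grants = [ConsentGrant("personalization", start, timedelta(days=180))]
    print(is_permitted(grants, "personalization", start + timedelta(days=30)))   # True
    print(is_permitted(grants, "personalization", start + timedelta(days=200)))  # False
    print(is_permitted(grants, "ad_targeting", start + timedelta(days=30)))      # False
```

Checking the exact purpose, rather than a single yes/no flag, is what separates scoped consent from an “agree to everything” button.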

AI systems will be encouraged to minimize storage of:

Outdated communication threads

Unrelated personal information

Archived profiles kept without user approval

Excess biometric data

Strict enforcement of data minimization will follow.
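Data minimization is often enforced through retention rules: each category of data gets a maximum age, and anything older, or anything without a rule at all, is flagged for deletion. The following Python sketch uses hypothetical categories and periods; real retention limits depend on the applicable law and the organization's own policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical retention periods per data category (illustration only).
RETENTION = {
    "chat_thread": timedelta(days=365),
    "biometric_template": timedelta(days=30),
}


@dataclass
class StoredItem:
    item_id: str
    category: str
    created_at: datetime  # timezone-aware UTC timestamp


def split_by_retention(items, now=None):
    """Return (kept, expired) lists according to RETENTION.

    Items whose category has no retention rule are treated as expired,
    so data without a documented purpose is not kept by default.
    """
    if now is None:
        now = datetime.now(timezone.utc)
    kept, expired = [], []
    for item in items:
        limit = RETENTION.get(item.category)
        if limit is not None and now - item.created_at <= limit:
            kept.append(item)
        else:
            expired.append(item)
    return kept, expired


if __name__ == "__main__":
    now = datetime(2026, 1, 1, tzinfo=timezone.utc)
    items = [
        StoredItem("a", "chat_thread", now - timedelta(days=10)),
        StoredItem("b", "biometric_template", now - timedelta(days=90)),
        StoredItem("c", "marketing_profile", now - timedelta(days=1)),
    ]
    kept, expired = split_by_retention(items, now)
    print([i.item_id for i in kept])     # ['a']
    print([i.item_id for i in expired])  # ['b', 'c']
```

Treating unknown categories as expired is a deliberate design choice in this sketch: it makes keeping data the exception that must be justified, rather than the default.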

Users can expect advances in:

Facial recognition systems

Voice identification security

Digital identity verification

Authentication without passwords

Detection of AI-based forgeries

Deepfake defenses will become standard rather than a luxury.

AI has no intrinsic sense of why privacy matters.

Its creators, however, will be compelled to prioritize it.

Ethical standards will become non-negotiable.

Cybercriminals are using:

AI-enhanced phishing techniques

Voice imitation scams

Fabricated videos

Automated bot infiltration

Synthetic identities

This evolution is leading to defenses that are:

Predictive in nature

Behavior-based

Monitored in real time

Driven by machine learning

Defenders are increasingly turning to AI to counter AI-driven attacks.

Businesses managing user information must now:

Appoint dedicated AI compliance personnel

Conduct system audits

Maintain comprehensive risk documentation

Document user access

Install fail-safe features

Report any breaches immediately

AI oversight is transforming into a professional role.
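Documenting user access is easier to trust when the log itself is tamper-evident. One common approach, sketched below in Python, is a hash chain: each entry stores the SHA-256 hash of the previous entry, so editing an earlier record breaks verification. The `AccessLog` class and its field names are hypothetical. On its own, a hash chain only exposes tampering if the latest hash is also kept somewhere an attacker cannot rewrite, such as write-once storage.

```python
import hashlib
import json
from datetime import datetime, timezone


class AccessLog:
    """Hypothetical append-only access log; each entry links to the previous hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(entry: dict) -> str:
        # Canonical JSON (sorted keys) so the same entry always hashes identically.
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def record(self, actor: str, resource: str, action: str, when=None) -> dict:
        if when is None:
            when = datetime.now(timezone.utc)
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry = {
            "actor": actor,
            "resource": resource,
            "action": action,
            "timestamp": when.isoformat(),
            "prev_hash": prev_hash,
        }
        # Hash is computed over every field except the hash itself.
        entry["hash"] = self._digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; return False if any entry was altered or reordered."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


if __name__ == "__main__":
    log = AccessLog()
    log.record("analyst-7", "customer/1042", "read")
    log.record("svc-model-train", "dataset/v3", "export")
    print(log.verify())  # True
    log.entries[0]["action"] = "delete"  # simulate tampering with an old entry
    print(log.verify())  # False
```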

Neglecting regulations will:

Deter investments

Damage brand reputation

Restrict market entry

Invite legal actions

Trigger government interventions

By 2026, there will be little room for digital negligence.

Authorities are employing:

AI-assisted audits

Cyber forensic examinations

Digital monitoring

Cross-border collaborations

Infrastructure evaluations

Digital wrongdoing will not go unnoticed.

What can you do in the meantime? Start by removing unused accounts.

Cut down on oversharing.

Control permissions closely.

Evaluate:

Application configurations

Location settings

File-sharing permissions

History retention

Biometric operations

The less information you share, the less risk you carry.

Still, there are grounds for optimism:

New regulations can decrease risks

Penalties enhance compliance

Architecture limits vulnerabilities

Awareness fosters correct usage

Safety advances through obligation rather than hope.

By the year 2026:

Annual AI audits will be the norm

Negligent data breaches will carry criminal liability

User rights will be enforceable

Hidden processing will be prohibited

Consent will have real meaning

Transparency will be compulsory

Your information will still be collected and stored.

But reckless handling of it will no longer be tolerated.

The internet evolved chaotically.

AI is being molded responsibly before it spirals out of control.

So, will your data be safer by 2026? Yes, but not through hope alone.

Data will be more secure because:

Governments are taking action

Stricter regulations are being enforced

Improvements in systems are underway

Accountability is becoming standard

Users are becoming more aware

However, if negligence persists, this promise will fail.

Improving security requires effort from technology developers and users alike.

AI’s capabilities will grow.

Your own security efforts must keep pace.

This piece serves as informational content and does not provide legal or cybersecurity advice. Readers should consult qualified experts for advice on data protection laws and AI governance.

Kazakhstan Boosts Oil Supply as US Winter Storm Disrupts Production

Oil prices inch down as Kazakhstan's oilfield ramps up production, countered by severe disruptions f

Return of Officer's Remains in Gaza May Open Rafah Crossing

Israel confirms Ran Gvili's remains identification, paving the way for the Rafah border crossing's p

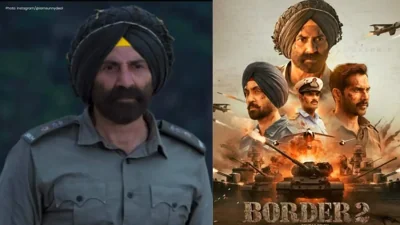

Border 2 Achieves ₹250 Crore Globally in Just 4 Days: Sunny Deol Shines

Sunny Deol's Border 2 crosses ₹250 crore in 4 days, marking a significant breakthrough in global box

Delay in Jana Nayagan Release as Madras HC Bars Censorship Clearance

The Madras High Court halts the approval of Jana Nayagan's censor certificate, postponing its releas

Tragedy Strikes as MV Trisha Kerstin 3 Accident Leaves 316 Rescued

The MV Trisha Kerstin 3 met an unfortunate fate near Jolo, with 316 passengers rescued. The governme

Aryna Sabalenka Advances to Semi-Finals, Targeting Another Grand Slam Title

Top seed Aryna Sabalenka triumphed over Jovic and now faces Gauff or Svitolina in the semi-finals as