Post by: Monika

Photo: Reuters

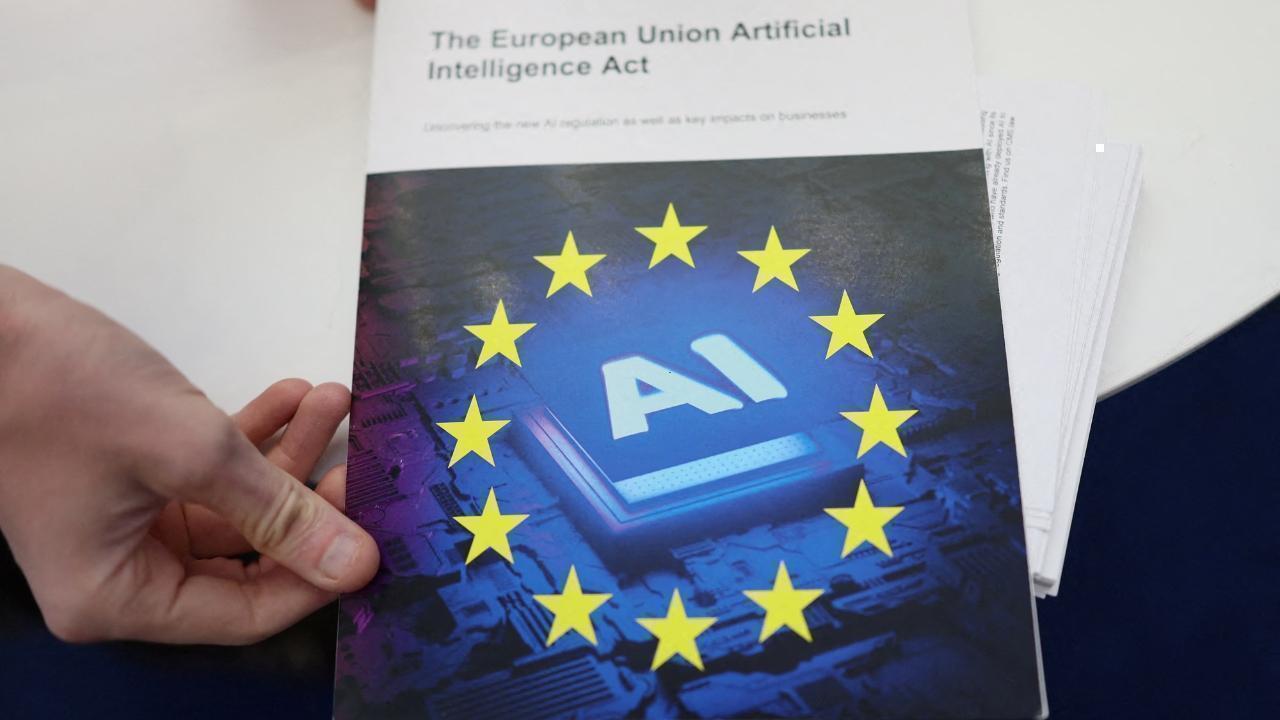

The European Union (EU) has started giving important advice to companies and creators who build artificial intelligence (AI) systems. This advice is about how they can follow new laws that protect people and make sure AI is safe to use. The new rules focus especially on AI systems that could cause big problems if something goes wrong.

Why Is the EU Giving These Rules?

AI technology is growing very fast. It is used in many areas like medicine, finance, transportation, and more. While AI can help a lot, it can also create risks if it is not used carefully. For example, some AI systems might make wrong decisions, show unfair results, or even harm people.

The EU wants to make sure AI is used safely and fairly. To do this, it has passed a new law called the "EU AI Act." The law sets out how AI systems should be built and used to avoid risks.

What Are AI Models with Systemic Risks?

Some AI systems have bigger effects than others. If they fail or make mistakes, the problems they cause can affect many people or important parts of society. These AI systems are called “AI models with systemic risks.”

For example, AI used in banks to approve loans, AI that helps control power grids, and AI used to check important government work can all have systemic risks. If these systems do not work properly, they can cause large problems for many people.

Because of these risks, the EU is especially careful about these kinds of AI.

What Does the New Advice Say?

The EU’s new advice helps AI builders understand what they need to do to follow the rules. It gives clear steps and tips on how to design AI that is safe, fair, and transparent.

Some important points in the advice are:

Risk Assessment: AI builders should carefully check where their AI could cause harm or mistakes. They must think about how serious the problems could be and who might be affected.

Data Quality: The data used to train AI must be good and balanced. If the data is wrong or unfair, the AI might make biased or incorrect decisions.

Transparency: People using AI systems should know how the AI works and what it does. This means companies should explain AI decisions clearly so users can understand them.

Human Oversight: AI should not make important decisions without people checking. Humans should be able to review and control AI decisions, especially where the risks are high.

Security Measures: AI systems must be protected from hackers or attacks. The advice says companies should build strong security into their AI.

Testing and Monitoring: AI models must be tested before use and regularly checked during use to find and fix problems quickly.

Who Needs to Follow These Rules?

The advice is mainly for companies and groups that create or use AI with big risks. This includes banks, hospitals, energy companies, governments, and others who use AI in important ways.

Even smaller companies must pay attention if their AI affects many people or important services.

Why Is This Important?

AI is becoming part of everyday life. We see it in voice assistants, search engines, medical diagnosis, and more. But when AI affects important decisions, it must be trustworthy.

If AI systems make mistakes, they can hurt people’s lives, cause unfair treatment, or disrupt critical services. That’s why the EU wants to make sure AI is safe and responsible.

By giving this advice, the EU helps AI makers avoid problems before they happen.

How Will This Help People?

With these rules and advice, people can trust that AI systems used around them are checked and safe.

The rules are designed to protect people, so the EU takes violations seriously. This pushes companies to take responsibility and build better AI systems.

How Does This Fit with Other Global Efforts?

The United States and several countries in Asia are also working on rules for AI. The EU’s approach is one of the most detailed and strict.

By leading with clear rules, the EU hopes to set an example for safe and ethical AI worldwide.

What Is Next for AI and the EU?

AI is a powerful tool that can help many parts of life, but it also comes with risks. The European Union is making sure that AI systems with big effects follow strong rules to keep people safe and treat them fairly.

With clear advice and laws, the EU wants AI to be trustworthy and used in ways that benefit everyone. This new guidance helps AI creators understand their responsibilities and how to follow the law.

For people living in the EU, this means safer AI systems and better protection from AI mistakes. It also sets a path for the future where AI and humans can work together responsibly.
